Note: Making modifications to the backlog database may lead to inconsistent behavior in ODX. Do not make any modifications to this file unless TimeXtender Support gives specific instructions to do so.

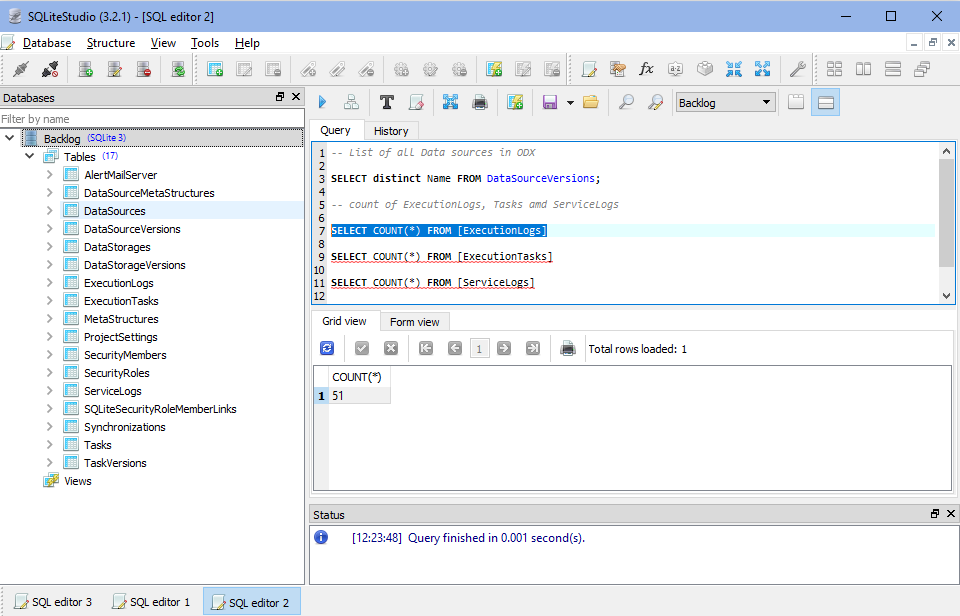

The ODX server uses a local backlog (a SQLite database file) to store metadata about data sources, storage, tasks, and so on. This backlog is continuously synced with the cloud environment. Analyzing the backlog can help troubleshoot some performance issues.

Note: Only metadata, such as tasks, data source configuration, incremental load settings, and table/field selection, is stored in the backlog and in the cloud environment. Connection strings (user names, passwords, etc.) are all encrypted.

Download SQLiteStudio for Windows (note: this is a third-party, open-source tool)

Decompress the .zip file and run SQLiteStudio.exe

Navigate to the ODX backlog folder

In ODX 20.5 and earlier versions, the file is stored in:

C:\Program Files\TimeXtender\ODX <version>\BACKLOG\Backlog.sqlite

In ODX 20.10 and later versions, the file is stored in:

C:\ProgramData\TimeXtender\ODX\<version>\BACKLOG\Backlog.sqlite
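Because the location changed between versions, a short Python sketch can check both places. It assumes the default C:\Program Files and C:\ProgramData roots (passed in as parameters so they can be changed) and that <version> is a single folder name. `find_backlogs` is a hypothetical helper, not part of ODX; it only lists files and never opens them.

```python
import glob
import os


def find_backlogs(program_files=r"C:\Program Files", program_data=r"C:\ProgramData"):
    """Return the Backlog.sqlite paths found in the two documented ODX locations.

    Hypothetical helper: it only lists matching files and never opens them.
    """
    patterns = [
        # ODX 20.5 and earlier: <Program Files>\TimeXtender\ODX <version>\BACKLOG
        os.path.join(program_files, "TimeXtender", "ODX *", "BACKLOG", "Backlog.sqlite"),
        # ODX 20.10 and later: <ProgramData>\TimeXtender\ODX\<version>\BACKLOG
        os.path.join(program_data, "TimeXtender", "ODX", "*", "BACKLOG", "Backlog.sqlite"),
    ]
    found = []
    for pattern in patterns:
        found.extend(glob.glob(pattern))
    return sorted(found)


if __name__ == "__main__":
    for path in find_backlogs():
        print(path)
```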

Make a backup copy of the Backlog.sqlite file and give it a descriptive name, such as Copy_Backlog.sqlite

Note: Do not open additional connections to the Backlog.sqlite file while it is in use, since this may interfere with ODX activity in progress. For analysis, always make a copy of the current backlog file and work on the copy.
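The copy can also be scripted. This minimal Python sketch uses a plain file copy, so no SQLite connection is ever opened on the live backlog. `copy_backlog` and the `Copy_Backlog_<timestamp>.sqlite` naming are hypothetical choices. The sketch copies only the main file and assumes no SQLite sidecar file (such as a `-wal` file) holds pending changes. If the file is locked, the copy raises an `OSError`, which the sketch lets propagate.

```python
import os
import shutil
from datetime import datetime


def copy_backlog(source, dest_dir):
    """Copy Backlog.sqlite into dest_dir under a timestamped, descriptive name.

    Uses a plain file copy, so no SQLite connection is opened on the live file.
    """
    stamp = datetime.now().strftime("%Y%m%d_%H%M%S")
    dest = os.path.join(dest_dir, f"Copy_Backlog_{stamp}.sqlite")
    shutil.copy2(source, dest)  # copy2 also preserves the file's timestamps
    return dest
```

For example, `copy_backlog(path, r"C:\Temp")`, with `path` set to the backlog location for your ODX version and `C:\Temp` standing in for any working folder you choose.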

From the SQLiteStudio UI, use Database menu -> Connect to the database to open the copy (e.g. the Copy_Backlog.sqlite file)
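As an alternative to the SQLiteStudio UI, Python's built-in `sqlite3` module can open the copy. This sketch opens it through a read-only URI (`mode=ro`), so an accidental write fails instead of altering the file. `list_tables` is a hypothetical helper, and the table names it returns depend on your ODX version.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def list_tables(copy_path):
    """Open a backlog copy read-only and return the names of its tables."""
    # mode=ro makes SQLite refuse writes and fail if the file does not exist,
    # instead of silently creating an empty database.
    uri = Path(copy_path).resolve().as_uri() + "?mode=ro"
    # closing() guarantees the connection is closed; sqlite3's own context
    # manager only commits or rolls back.
    with closing(sqlite3.connect(uri, uri=True)) as conn:
        rows = conn.execute(
            "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
        ).fetchall()
    return [name for (name,) in rows]
```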

Useful Queries

Once you have connected to the copy of the ODX backlog file, you can download and open the queries below to retrieve useful information from the metadata database.
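Outside SQLiteStudio, a downloaded query file such as TaskLastRunStatus.sql can be run against the copy with a short Python sketch. It assumes each .sql file contains a single SELECT statement (Python's `sqlite3` `execute()` rejects multiple statements) and is UTF-8 text, with or without a byte-order mark. `run_query_file` is a hypothetical helper.

```python
import sqlite3
from contextlib import closing
from pathlib import Path


def run_query_file(copy_path, sql_path):
    """Run the single SELECT in sql_path against a read-only backlog copy.

    Returns (column_names, rows).
    """
    # utf-8-sig strips a byte-order mark if the file was saved with one.
    sql = Path(sql_path).read_text(encoding="utf-8-sig")
    uri = Path(copy_path).resolve().as_uri() + "?mode=ro"
    with closing(sqlite3.connect(uri, uri=True)) as conn:
        cur = conn.execute(sql)
        columns = [d[0] for d in cur.description]
        rows = cur.fetchall()
    return columns, rows
```

For example: `columns, rows = run_query_file("Copy_Backlog.sqlite", "TaskLastRunStatus.sql")`.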

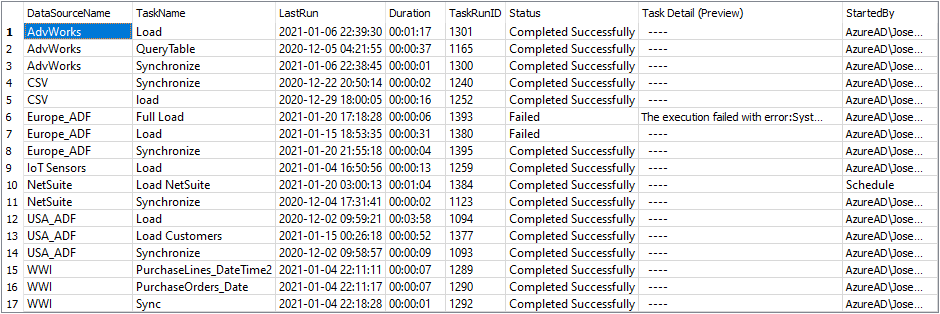

Task Last Run Status

Download TaskLastRunStatus.sql query

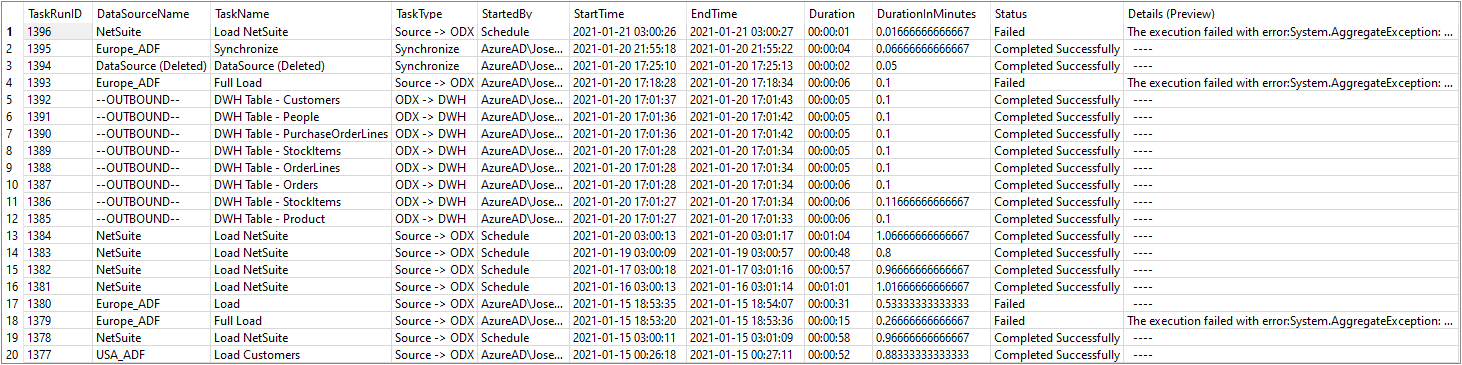

Task Execution History

Download TaskExecutions.sql query

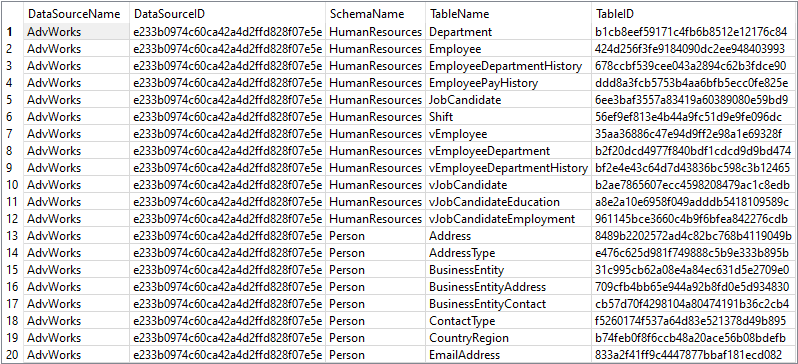

List of Data Source Tables

Download DataSourceTables.sql query
