This feature relates to transfer from ODX to DWH with ADO.Net transfer not Azure Data Factory.

Reading parquet files can be a high memory consuming task. When transferring data from Azure Data Lake to a data warehouse, some users experience the following error:

System.OutOfMemoryException: Exception of type 'System.OutOfMemoryException' was thrown

The Operational Data Exchange (ODX) comes with an option to limit the memory used when transferring data with the internal transfer method.

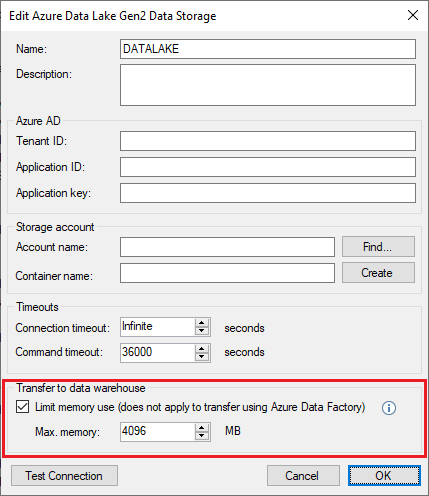

The option is found in the “Azure Data Lake Gen2 Data Storage” dialog:

When using this option, the extraction will be done by grouping the columns in chunks that do not exceed the limit set. If a column exceeds the memory limit by itself, it will still be transferred. In the case that the limit is exceeded for one or more columns there will be a warning log for the outbound transfer.

Log example:

The column dbo.Customers.Description exceeds the maximum threshold. Column size: 80165239 Current maximum: 1 MB

The calculation of how the transfer will done is only calculated on the first file for the transfer and all following files will follow this pattern.

The “Limit memory use” option will be used when the first file contains one or more row groups that exceeds the “Max. memory” value. When this is the case the columns are grouped by the principles of the BinPack algorithm. The first column group will also contain system fields.

Each column group will have a correspondent table in the destination SQL Server database.

The tables are identified with the prefix “ODX_TEMP_” and postfix “_pgc“ followed by the column group number.

Besides the column values, each table contains a column named _pcgRecId_” which is the data row identifier.

The column group tables are joined together in a view using the “_pcgRecId_” column. The view is identified by the postfix “ODX_TEMP_“ and postfix “_pgc”.

The tables and view are dropped right before TimeXtender completes the transfer step. In case of a failure in the data transfer the tables and view are dropped right before the next transfer of the table.

Before the transfer starts, information about how the transfer is going to happen is logged.

Log example when memory limit is not exceeded:

First file size :419,558170318604 MB

Row group count: 4 max. row group size: 644890641 bytes

Column groups:

Group 0 size: 266223246 bytes

Columns: [CustomerId], [Type],[Name],[Lastname]

Group 1 size: 263859172 bytes

Columns: [Description],[CreatedDate]

Group 2 size: 114808223 bytes Columns: [ModifiedDate], [CustomerGroup]

Log example when memory limit is not exceeded:

dbo.Customers does not exceed maximum memory limit. 'Limit memory use' logic will not be applied. Max. row group size: 148906 bytes.

1 Comments