Note: There is an updated version of this article, which applies to the next generation of TimeXtender.

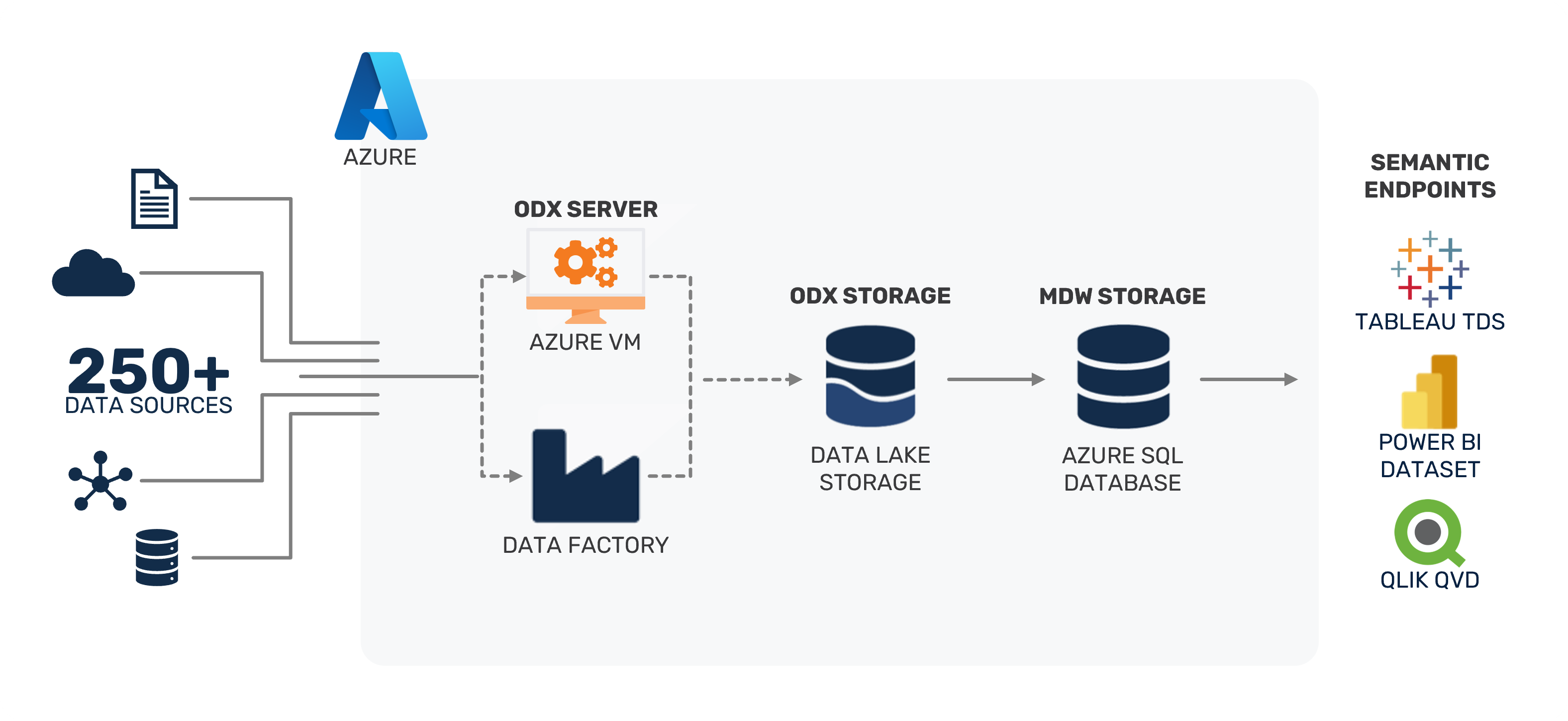

This is a standard reference architecture to implement TimeXtender fully in Azure, the goal is to balance performance and cost, when working in the cloud.

To prepare your TimeXtender environment in Azure, here are the steps we recommend.

- Create Application Server - Azure VM

- Create ODX Storage - Azure Data Lake Storage Gen2

- Prepare for Ingest and Transport - Azure Data Factory (recommended)

- Create MDW (and DSA) Storage - Azure SQL DB

- Configure PowerBI Premium Endpoint (Optional)

- Estimate Azure Costs

|

1. Create Application Server - Azure VM

|

| To serve the TimeXtender application in Azure, we recommend using an Azure Virtual Machine (VM), sized according to your solution's requirements. |

| Guide: |

Create a TimeXtender Application Server in Azure

|

| Considerations: |

- Recommended Sizing: DS2_v2 (for moderate workloads)

- If Azure VM (App Server) serves the ODX Server, it must remain running for TimeXtender to run.

- This VM will host the services to run TimeXtender.

- ODX Service

- Scheduler Service

- Server Services

|

|

2. Create ODX Storage - Azure Data Lake Storage Gen2

|

| ADLS Gen2 is highly performant, economical, scalable, and secure way to store your raw data. |

| Guide: |

Use Azure Data Lake Storage with TimeXtender

|

| Considerations: |

- When creating the ADLS Gen2 data lake service, you must enable Hierarchical Namespaces

- TimeXtender writes files in Parquet file format, a highly compressed, columnar storage in the data lake.

- It is possible for ODX Server to store data in Azure SQL DB, but this adds cost and complexity but no additional functionality

- When using Azure Data Lake for ODX and SQL DB for the Data Warehouse, it is highly recommended to use Data Factory to transport this data.

- ADLS will require a service principle, called App Registration in Azure, for TimeXtender to access your ADF service.

- Both Data Lake and ADF, may share the same App Registration if desired.

|

|

3. Prepare for Ingest and Transport - Azure Data Factory (optional)

|

| For large data movement tasks, ADF provides amazing performance and ease of use for both ingestion and transport. |

| Guide: |

Use Azure Data Factory for Data Movement TimeXtender v20

|

| Considerations: |

- When creating ADF resources use Gen2, which is the current default

- A single ADF service can be used for both transport and ingestion

- Ingestion from data source to ODX Storage

- Transport from ODX to MDW

- The option to use ADF is not available for all data source types, but many options are available.

- ADF Data sources do not support ODX Query Tables at this time.

- ADF's performance can be quite costly for such incredible fault-tolerant performance

- ADF will require a service principle, called App Registration in Azure, for TimeXtender to access your ADF service.

- Both Data Lake and ADF, may share the same App Registration if desired.

|

|

4. Create MDW Storage - Azure SQL Database

|

| With its ability to auto-pause, Azure SQL Single DB Serverless is a great, potentially cost-saving option for the data warehouse storage, both Modern Data Warehouse (MDW) and Data Staging Area (DSA). |

| Guide: |

Use Azure SQL Single DB with TimeXtender

|

| Considerations: |

- Recommended SQL Single DB (vCore - General Purpose) Sizing:

- Serverless - Min 10 vCores - Max 10 vCores

- Data Max Size - 50 GB

- When Serverless is conducting load balancing, it will drop connections, therefore, when using Serverless for your data warehouse, you want to set the Min vCores and Max vCores to the same level.

- The Serverless compute tier can be a cost saving option, if you do not require your database to be online more that 50% of the time. If you do require >50% uptime for the Data Warehouse, then the Provisioned compute tier will be more economical.

- Azure SQL Single DB cannot communicate with other databases, by design.

- If using a Data Staging Area (DSA) in your solution, you can use the same SQL DB, but must separate by schema, such a 'etl' and 'dbo'

|

|

5. Configure PowerBI Premium Endpoint (Optional)

|

| If you have Power BI Premium, TimeXtender and deploy and execute Semantic Models directly to the Power BI Premium endpoint. |

| Guide: |

Power BI XMLA Endpoint

|

|

6. Estimate Azure Costs

|

| Balancing cost and performance requires monitoring and forecasting of your services and needs. |

| Guide: |

Azure Pricing Calculator*

|

| Considerations: |

- Azure provides a pricing calculator to help you estimate your costs for various configurations.

*Please note, this Azure pricing calculator does not include the price of the TimeXtender License or Consulting services.

|

5 Comments