Note: There is an updated version of this article, which applies to the next generation of TimeXtender.

Using Azure data factory for data movement is ideal for large data sets. By using Azure Data Factory the data is transferred directly from source to target and therefore does not rely on the limited resources of the application server.

TimeXtender can use Azure Data Factory (ADF) to move data in the following two ways:

- Source to ODX

- ODX to Data Warehouse

The following guide will show you how to:

- Create an Azure Data Factory Resource

- Transfer from Source to ODX using Azure Data Factory

- Transfer from ODX to Data Warehouse using Azure Data Factory

Create an Azure Data Factory Resource

Note: If you have already created a Data Factory you wish to use, you can skip this step.

- Azure Portal -> Create a new Resource -> Data Factory -> Create

- Select Version = V2

- Assign Subscription name, Resource Group, and Location.

- Git is not required so you can disable this.

- Once deployed, please note the following properties of the Data Factory which will be needed later:

- Azure Data Factory Name

- Subscription ID

- Resource Group Name

Create an App Registration

In order to access the data factory resources from TimeXtender, you will need to configure an App Registration in the Azure portal.

- In the Azure Portal menu, click on Azure Active Directory, then click on App Registrations in the menu bar on the left. Then click New Registration.

- Enter a name and select Accounts in this organizational directory only. The value of Redirect URI is the URL at which your application is hosted. Click Register when you are done.

- For the newly added App Registration, select Certificates & secrets to create a New Client Secret. This key is encrypted after save, so it needs to be documented somewhere safe. The secret will appear after you click Add.

- Please note the following properties of the App Registration which will be needed later:

- Azure Tenent ID

- Application ID

- Client Secret (Application Key)

Enable App Registration access to Data Factory

After the App Registration is created, you need to configure access to Data Factory.

Note: The following steps for access control describe the minimum permissions required in most cases. In your deployment/ production, you may fine-tune those permissions to align with your business rules and compliance requirements. Refer to Microsoft Azure documentation for details.

- Go back to the resource group where your data factory resource is located and select the Data Factory resource.

- In the menu bar on the left, select Access Control (IAM) and add a role assignment.

- Add the <App Registration Name> you just created to the role of Data Factory Contributor of the resource.

Note: When you add or remove role assignments, wait for 5 minutes before executing an ODX task. It can take up to 30 minutes for changes to take effect. For more details, review this article Troubleshoot Azure RBAC

Transfer Data from Source to ODX using Azure Data Factory

- Open your ODX server in a tab

- Right click Data Sources and click Add Data Source...

- On the first page, enter a Name and (optional) Description for your data source and click Next

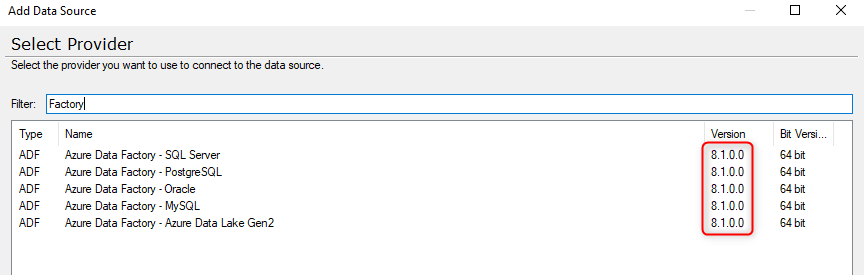

- On the Provider page, select one of the following Azure Data Factory data sources provider and click Next

- On the Connection Info page, enter the below information and click Next.

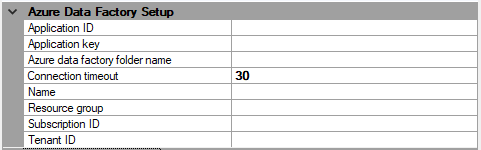

- Azure Data Factory Info

- Azure AD App Registration created in the above section

- Application ID

- Application Key (Client Secret)

- (Optional) Azure Data Factory folder name - pipelines and datasets will be placed in this folder in ADF.

- Azure Data Factory Name

- Resource Group

- Subscription ID

- Azure Tenant ID

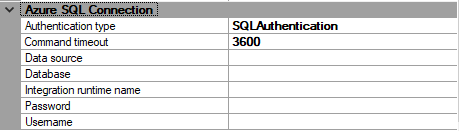

- Azure SQL connection

- The SQLAuthentication Authentication type is the only available option

- The Command timeout is in seconds, so the default is 1 hour.

- The Data source is the fully qualified server name of the data source - This is the connection property used by Azure Data Factory when extracting data and when synchronizing metadata (Schemas, Table Names, Field Names & Data Types).

- The Database name you want to connect to on the data source

- Integration runtime name: This is only required when connecting to On-Premise data sources. If using this option, you must also Configure a Self-Hosted Integration Runtime.

- The Username is the SQL authentication user name

- The Password is the password of the above user account

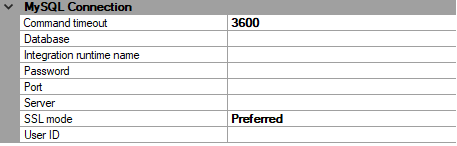

- MySQL Connection

- The Command timeout is in seconds, so the default is 1 hour.

- The Database name you want to connect to on the server

- Integration runtime name: Same as above this is only required when connecting to On-Premise data sources.

- The Password is the password of the user account

- The default Port is 3306

- The Server is the location where the MySQL is running

- SSL mode

- The User ID is the account that as minimum have read access to the database.

- Oracle Connection

- The setup fields necessary are the same as what is in the normal Oracle guide for the EZ connect.

- Oracle guide

- Only difference is the Host is the Server in the other guide.

- Azure Data Factory Info

Continue setting up the ODX data source as usual.

Transfer from ODX to Data Warehouse using Azure Data Factory

Note:

This option is NOT supported when using Azure Synapse SQL Pool (SQL DW)

You MUST configure a separate Azure Data Factory resource for each environment

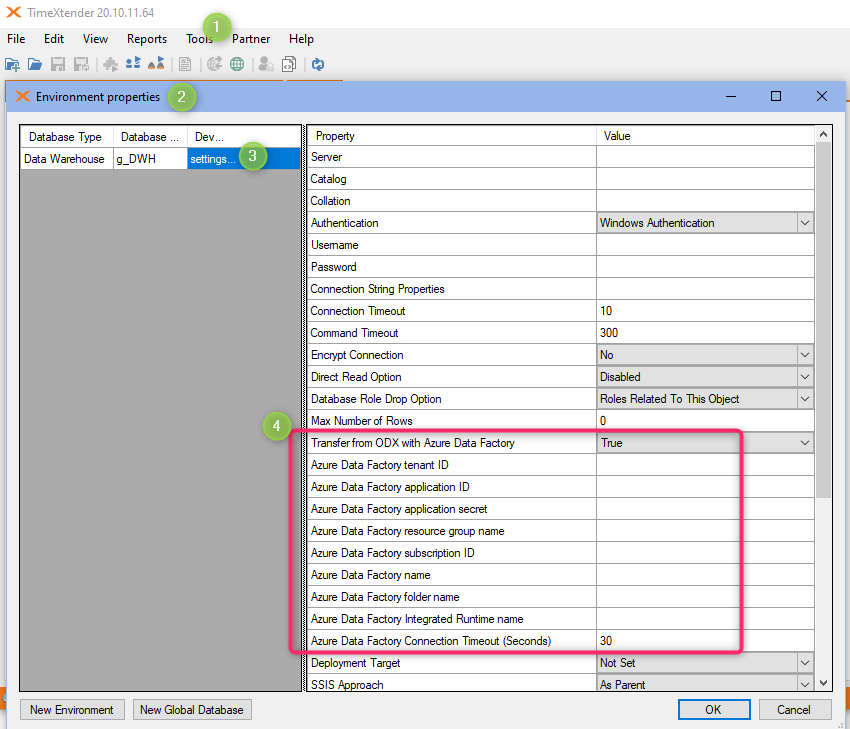

- Go to Tools menu -> Environment Properties -> Settings

- Set "Transfer from ODX with Azure Data Factory" = True

- Specify required properties for Data Factory

Troubleshooting

1. Connectivity Error:

A. System.Data.SqlClient.SqlException: A network-related or instance-specific error occurred while establishing a connection to SQL Server. The server was not found or was not accessible

Important: The data source needs to be accessed by both an Azure Data Factory and the ODX. If you receive an error, it is important to isolate which component is unable to access the data source.

If you make changes to Data Lake or Data Factory configuration Access Control (IAM) and role assignment etc.

- Wait until the changes take effect in Azure ( Troubleshoot Azure RBAC )

- Execute Task and verify it succeeds

- Preview Tables in Data Factory to verify it is working OK

- Synchronize objects in ODX (if you made changes in Data Source)

- Preview Tables in ODX to verify the Data Lake setup is working OK

- Deploy and Execute in TimeXtender

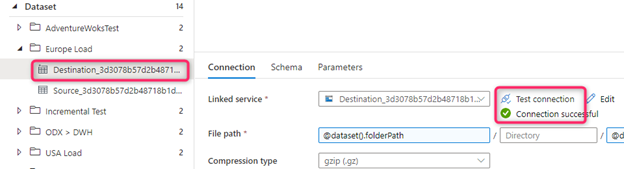

B. Take a look at your Azure Data Factory settings, specifically in the Author pane, under the Datasets section, look for the "Destination" dataset created for this data source. Should look something like this:

Try and click "Test connection" to see if that works.

2. Self-Hosted Integration Runtime issues

Verify the Self-Hosted Integration Runtime is installed and running.

Study this detailed troubleshooting guide:

Troubleshoot self-hosted integration runtime in Azure Data Factory

Review your Azure Data Factory settings, specifically in the Author pane -> monitor -> pipeline runs.

Under Activity runs, review the Error column to display the activity logs (if any).

3. Failed to get access token by using service principal .. invalid client secret

Review your Azure Data Factory settings, specifically in the Author pane, under the Datasets section, look for the "Destination" dataset created for this data source.

Resolve the access token error, then click "Test connection" to ensure it works.

4. Failed to get access token by using MSI authenticator

Failed to get access token by using MSI authenticator.

Acquire MI token from AAD failed. ErrorCode: invalid_client

A configuration issue is preventing authentication

Check to see if SQL authentication password has changed. Update and test the linked service in ADF setup.

Manage -> Linked Services, review SQL Server connection

5. Cannot set the AccessToken property if the 'Integrated Security' connection string keyword has been set to 'true' or 'SSPI'

System.Exception: Error executing meta data pipeline: { "errorCode": "2200", "message": "ErrorCode=SqlFailedToConnect,'Type=Microsoft.DataTransfer.Common.Shared.HybridDeliveryException,Message=Cannot connect to SQL Database: '.', Database: '<ODX_Storage_SQL database name>', User: ''. Check the linked service configuration is correct, and make sure the SQL Database firewall allows the integration runtime to access.,Source=Microsoft.DataTransfer.ClientLibrary,''Type=System.InvalidOperationException,

Message=Cannot set the AccessToken property if the 'Integrated Security' connection string keyword has been set to 'true' or 'SSPI'.

If your ODX storage is on a SQL server, change its connection from "Windows authentication to "SQL authentication" with a user and password.

1 Comments